8 Advanced parallelization - Deep Learning with JAX

By a mysterious writer

Last updated 05 July 2024

Using easy-to-revise parallelism with xmap() · Compiling and automatically partitioning functions with pjit() · Using tensor sharding to achieve parallelization with XLA · Running code in multi-host configurations
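The tensor-sharding idea listed above can be illustrated with a minimal sketch. It uses the `jax.sharding` API (`Mesh`, `NamedSharding`, `PartitionSpec`) together with `jax.jit`, and it is not taken from the chapter itself. The sketch assumes a recent JAX release, in which `jit` accepts sharded inputs directly and so covers much of what `pjit()` was introduced for. The mesh axis name `"data"` and the `forward` function are hypothetical choices made for illustration. On a machine with a single CPU the mesh contains one device, so the code still runs, but nothing is actually split.

```python
import jax
import jax.numpy as jnp
import numpy as np
from jax.sharding import Mesh, NamedSharding, PartitionSpec as P

# Build a 1-D device mesh over every available device (a single CPU also works).
# The axis name "data" is an illustrative choice, not a required name.
devices = np.array(jax.devices())
mesh = Mesh(devices, axis_names=("data",))

# Make the batch size a multiple of the device count so the leading axis splits evenly.
n_dev = len(devices)
x = jnp.arange(n_dev * 2 * 4, dtype=jnp.float32).reshape(n_dev * 2, 4)
w = jnp.ones((4, 3), dtype=jnp.float32)

# Shard x row-wise along the "data" mesh axis and replicate w on every device.
x_sharded = jax.device_put(x, NamedSharding(mesh, P("data", None)))
w_replicated = jax.device_put(w, NamedSharding(mesh, P()))

@jax.jit
def forward(x, w):
    # Hypothetical toy layer. XLA's partitioner propagates the input shardings
    # through the matmul, so each device typically computes only its own rows.
    return jnp.tanh(x @ w)

y = forward(x_sharded, w_replicated)
print(y.shape, y.sharding)
```

Splitting `x` while replicating `w` is the plain data-parallel layout. To lay the computation out differently, you change only the `PartitionSpec` values (for example, `P(None, "data")` for `w`), and `forward` itself stays untouched.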

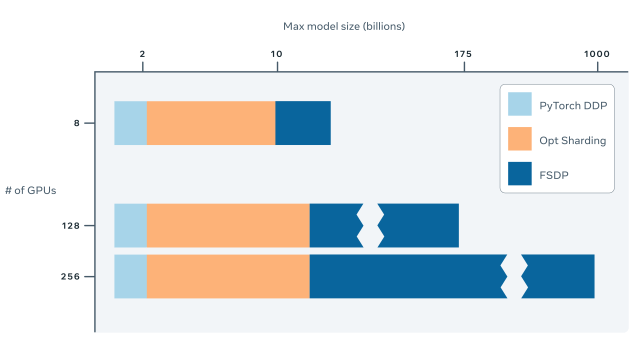

Fully Sharded Data Parallel: faster AI training with fewer GPUs

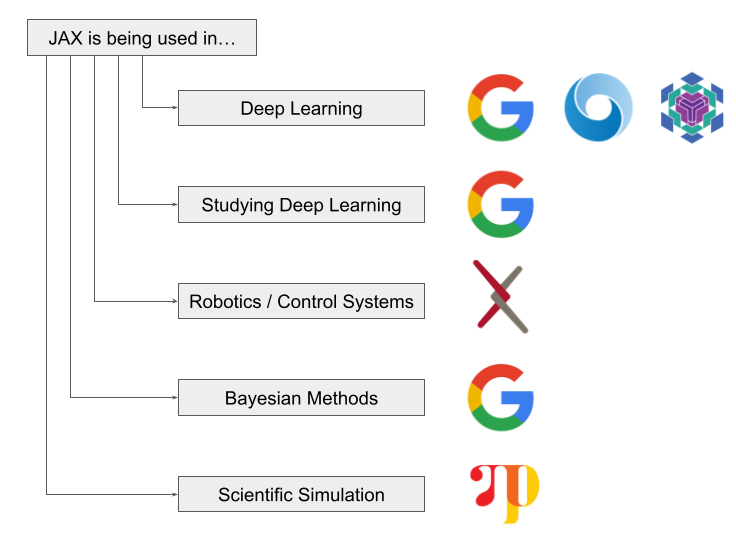

Intro to JAX for Machine Learning, by Khang Pham

Why You Should (or Shouldn't) be Using Google's JAX in 2023

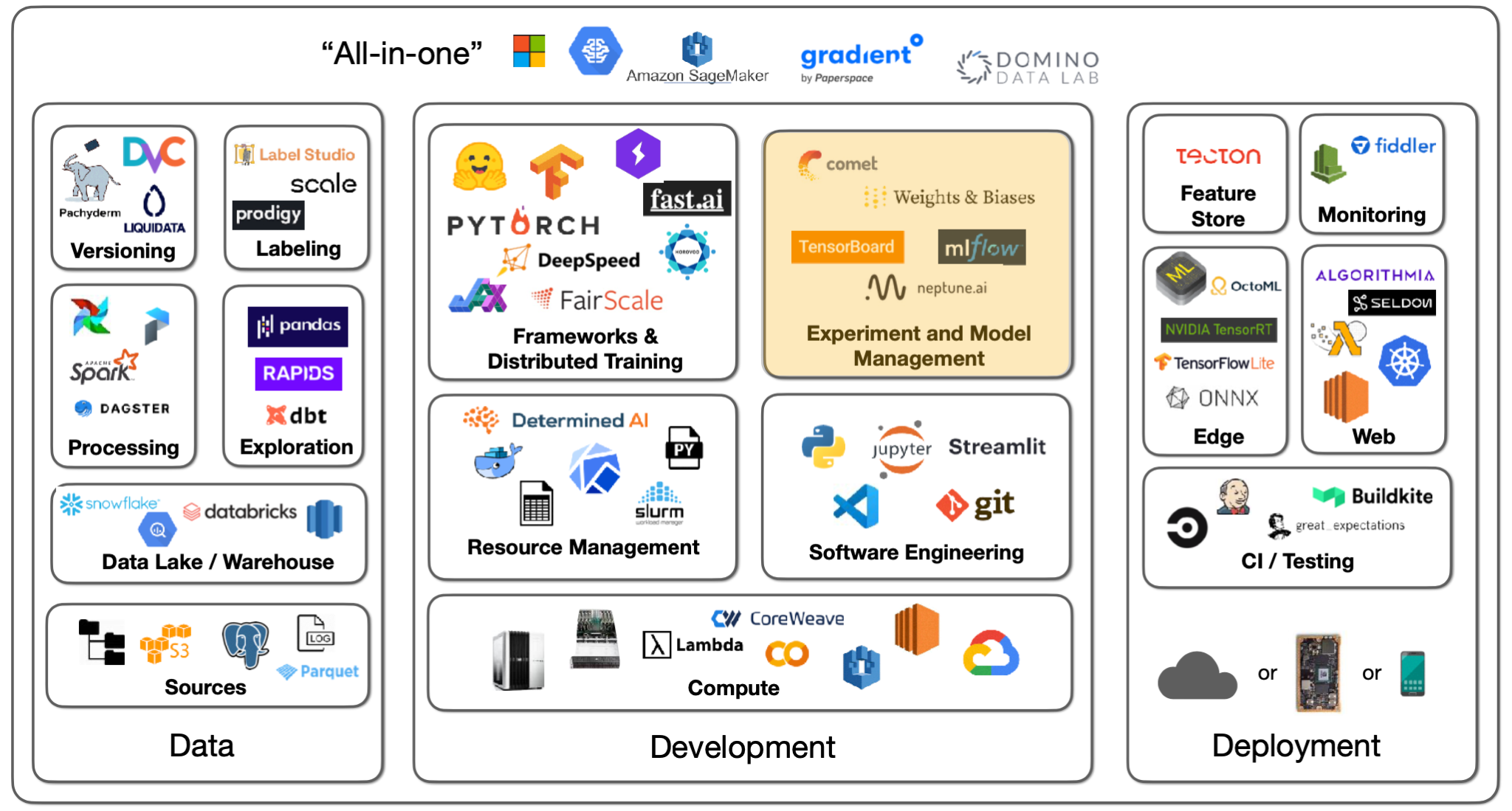

Lecture 2: Development Infrastructure & Tooling - The Full Stack

Top 11 Machine Learning Software - Learn before you regret

Introducing Neuropod, Uber ATG's Open Source Deep Learning

Deep Learning with JAX

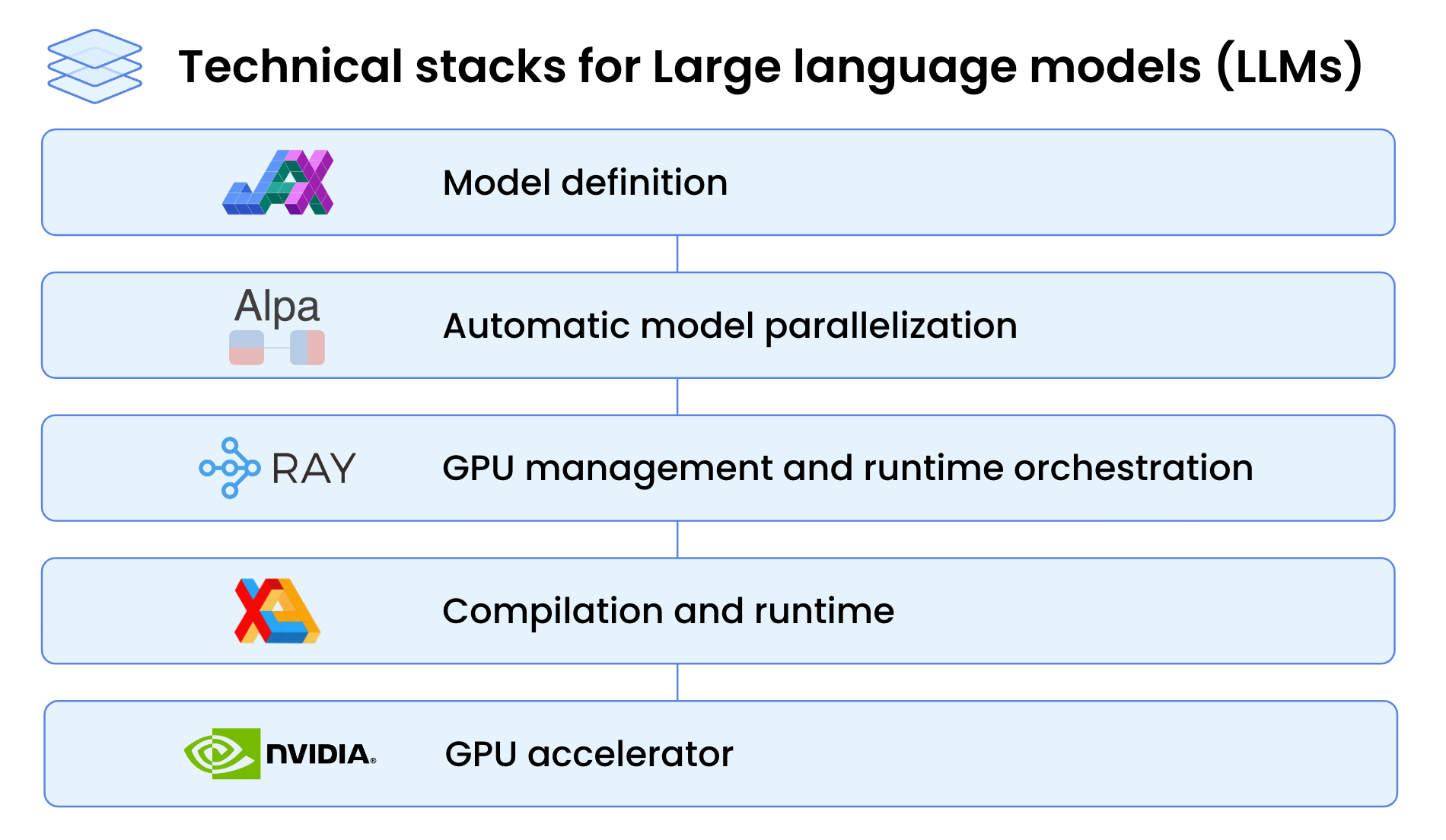

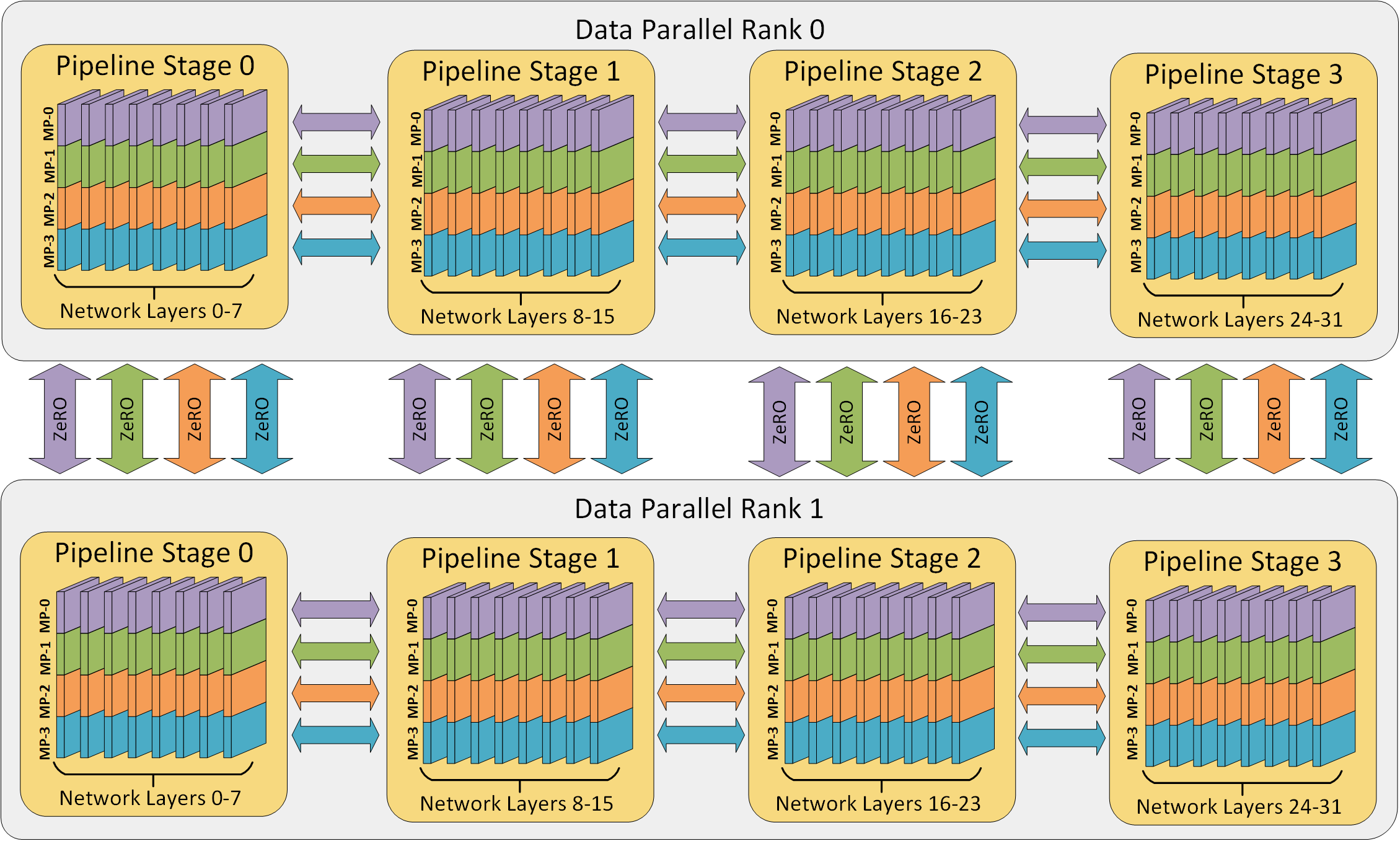

High-Performance LLM Training at 1000 GPU Scale With Alpa & Ray

A Brief Overview of Parallelism Strategies in Deep Learning

Breaking Up with NumPy: Why JAX is Your New Favorite Tool

Recomendado para você

-

How to Defeat 10,000 SCP-096 CLONES! (MULTIPLAYER)05 julho 2024

How to Defeat 10,000 SCP-096 CLONES! (MULTIPLAYER)05 julho 2024 -

Dana White Does Unbelievable Blackjack Split Against Dealer Ace In05 julho 2024

-

demo/docs/03_sparkLoad2StarRocks.md at master · StarRocks/demo05 julho 2024

-

SCP-2000 - Deus Ex Machina (SCP Animation)05 julho 2024

SCP-2000 - Deus Ex Machina (SCP Animation)05 julho 2024 -

Income tax basics05 julho 2024

Income tax basics05 julho 2024 -

Dawit Abebe, Artist05 julho 2024

Dawit Abebe, Artist05 julho 2024 -

PDF05 julho 2024

PDF05 julho 2024 -

MultiNet Installation and Administrator's Guide - Process Software05 julho 2024

MultiNet Installation and Administrator's Guide - Process Software05 julho 2024 -

Copyright © 2010 by the McGraw-Hill Companies, Inc. All rights05 julho 2024

Copyright © 2010 by the McGraw-Hill Companies, Inc. All rights05 julho 2024 -

RI360P2-QR14-LIU5X2 by TURCK - Buy or Repair at Radwell05 julho 2024

RI360P2-QR14-LIU5X2 by TURCK - Buy or Repair at Radwell05 julho 2024

você pode gostar

-

Vazou o time BRASILEIRO que o BLANKA de STREET FIGHTER torce - SBT05 julho 2024

-

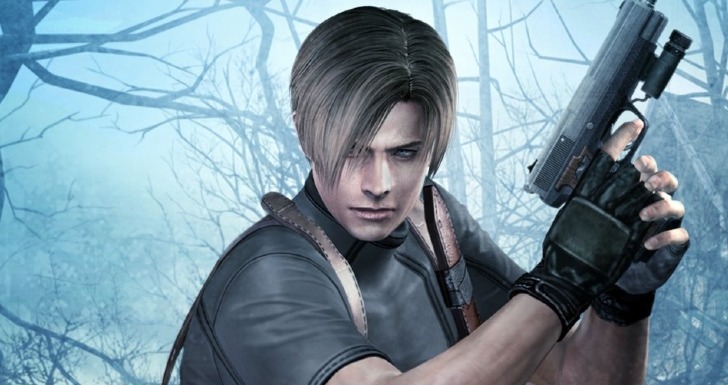

Resident Evil 4 remake tem data marcada05 julho 2024

Resident Evil 4 remake tem data marcada05 julho 2024 -

Tabuleiro de xadrez para imprimir, montar e brincar!05 julho 2024

Tabuleiro de xadrez para imprimir, montar e brincar!05 julho 2024 -

Pokemon Brick Bronze Part 2/2 by snipersteve2WasBack on DeviantArt05 julho 2024

Pokemon Brick Bronze Part 2/2 by snipersteve2WasBack on DeviantArt05 julho 2024 -

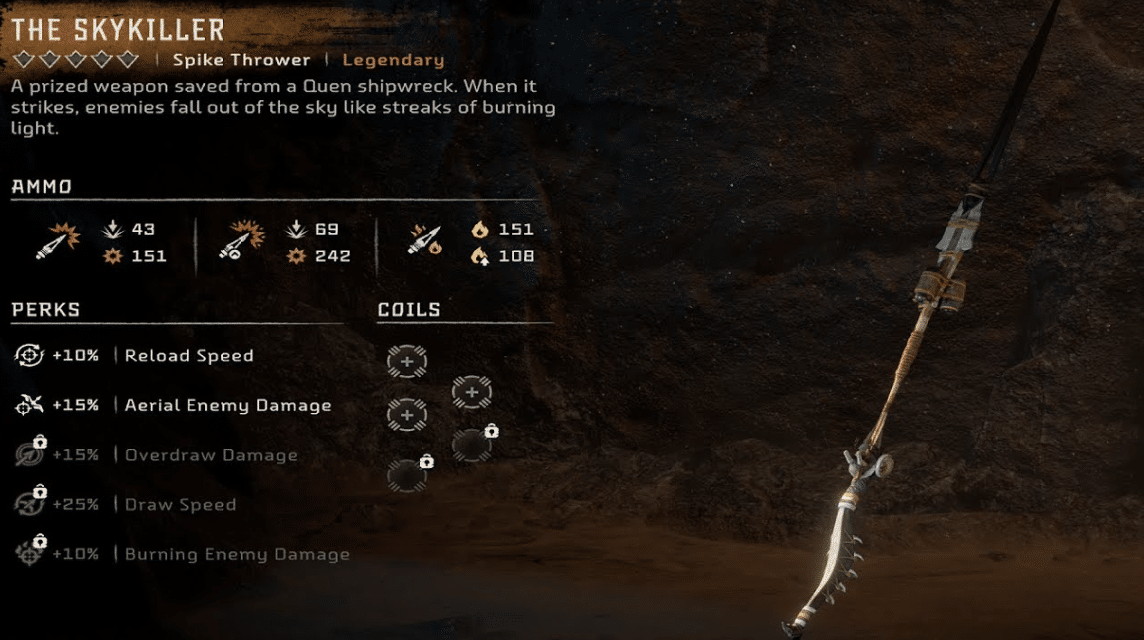

5 Best Weapons in Horizon Forbidden West05 julho 2024

5 Best Weapons in Horizon Forbidden West05 julho 2024 -

Do filme The Last: Naruto, personagens estão no jogo Naruto Shippuden: Ultimate Ninja Storm 4 - Purebreak05 julho 2024

Do filme The Last: Naruto, personagens estão no jogo Naruto Shippuden: Ultimate Ninja Storm 4 - Purebreak05 julho 2024 -

Boneca Antiga Persobagem de Desenho Animado Princesa Disney05 julho 2024

-

Awesome comic fighting - LoopAway.com Fighting gif, Stick fight, Stick man fight05 julho 2024

Awesome comic fighting - LoopAway.com Fighting gif, Stick fight, Stick man fight05 julho 2024 -

Gamemaker Studio transparent background PNG cliparts free download05 julho 2024

Gamemaker Studio transparent background PNG cliparts free download05 julho 2024 -

Fall 2019 Preview! – Random Curiosity05 julho 2024

Fall 2019 Preview! – Random Curiosity05 julho 2024